Simio: Makes it Easy to Design and Operate!

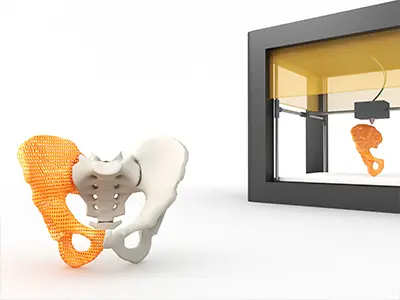

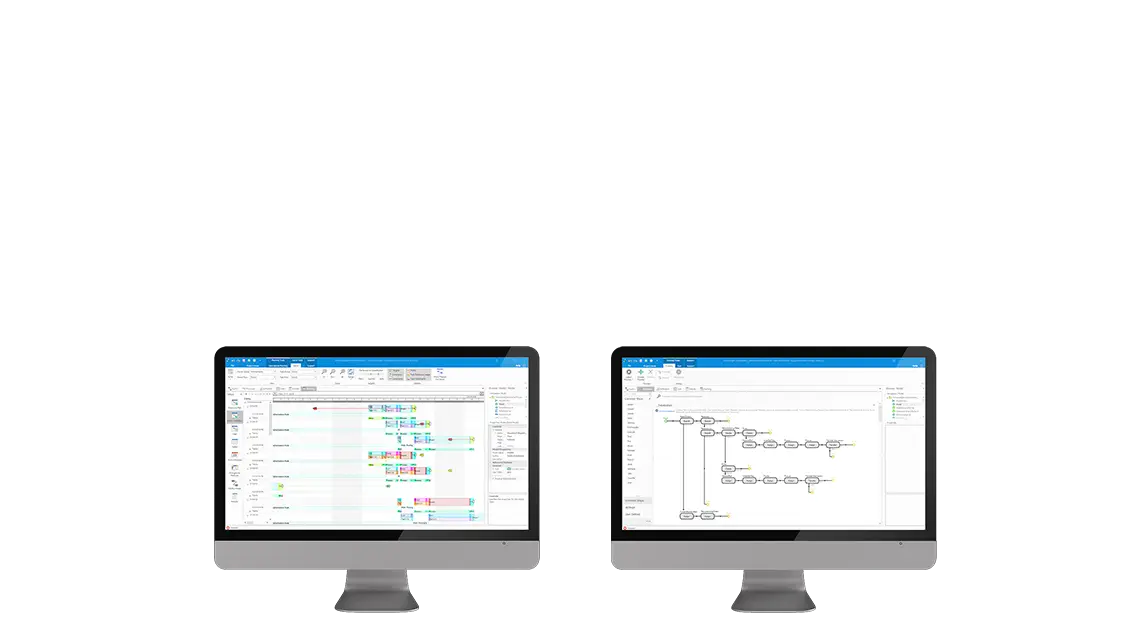

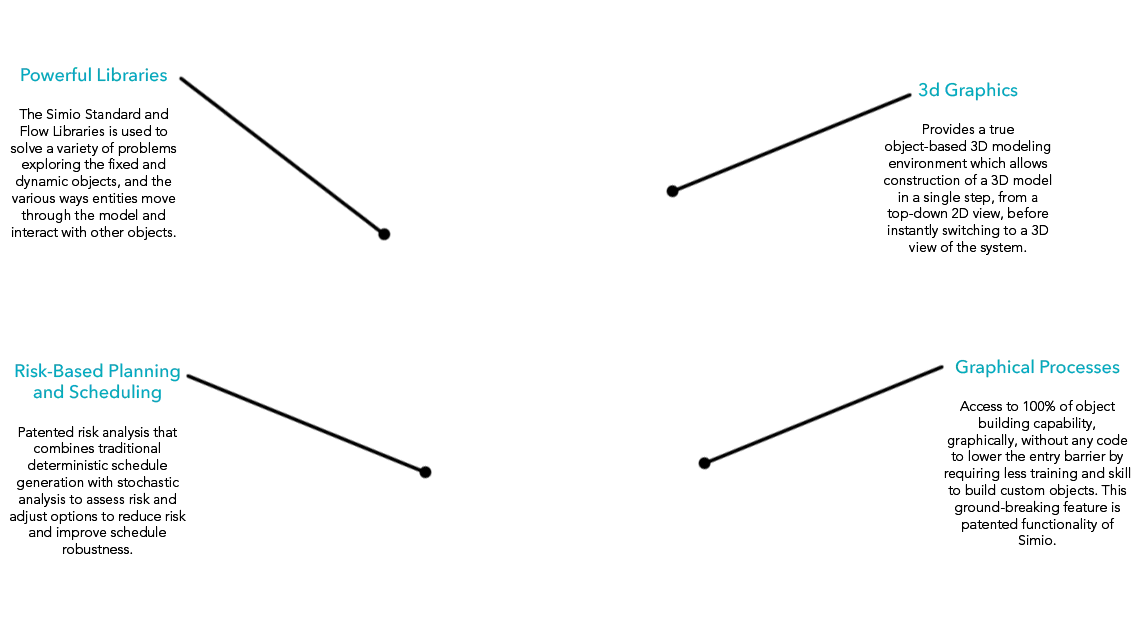

With patented features and an intuitive interface, Simio makes decisions easier and lets you visualize your system in 3D.

Industries

Simio excels at creating an abstract representation (a model) of important aspects of the real world in industries such as:

Simio Success

Here are some examples to prove Simio will work for you.

Free Simio Trial Edition Download

Simio Trial Edition is a fully functional, no-cost version of our award-winning, patented software that expires thirty (30) days after installation. Models built with the Simio Trial Edition can be imported into any of our commercial flagship products when using the same computer.

Download Now

Simio Digital Twin

Simio provides a systematic roadmap to improve your factory operations, synchronize and correct the information in your enterprise systems, and harmonize people and processes to deliver a fully executable production schedule.

Discover How

Simio Academic Program

Why research and teach with outdated technology when you can join over 800 universities worldwide that use Simio through the free Simio grant? We supply all the resources to make the conversion easy, including online teaching resources for a virtual classroom!

Read More